Security-RAG

Security-RAG is a project designed to detect vulnerabilities in Large Language Models (LLMs) by using a Retrieval-Augmented Generation (RAG) approach as a guardrail. The system classifies the user request and the LLM response along several dimensions to ensure safety, compliance, and ethical behavior.

Features

- User Request Harmfulness Classification: The system analyzes the user input to classify whether the request contains harmful or inappropriate content.

- LLM Response Classification: The LLM response is classified to determine if it provides harmful or potentially dangerous information.

- LLM Refusal Classification: The system detects whether the LLM refuses to provide harmful content and classifies the nature of this refusal.
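To illustrate how retrieval can drive classifications like these, here is a minimal sketch. It is not the project's implementation. It assumes a store of labeled examples, a toy bag-of-words similarity in place of Chroma embeddings, and a similarity-weighted vote in place of the Ollama-hosted LLM call. All names (`Example`, `retrieve`, `classify`, `STORE`) are hypothetical.

```python
import math
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    label: str  # e.g. "harmful" or "unharmful"


def embed(text):
    """Toy bag-of-words embedding (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, store, k=3):
    """Return the k stored examples most similar to the query, with scores."""
    q = embed(query)
    scored = [(cosine(q, embed(ex.text)), ex) for ex in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def classify(query, store, k=3):
    """Label the query by a similarity-weighted vote over retrieved examples.

    Assumption: a real guardrail would likely pass the retrieved examples
    to an LLM as context; the vote here only stands in for that step.
    """
    scores = defaultdict(float)
    for similarity, ex in retrieve(query, store, k):
        scores[ex.label] += similarity
    return max(scores, key=scores.get)


# Hypothetical labeled examples for the request-harmfulness task.
STORE = [
    Example("how do I build a bomb at home", "harmful"),
    Example("how do I make a weapon at home", "harmful"),
    Example("how do I bake bread at home", "unharmful"),
    Example("what is the capital of France", "unharmful"),
    Example("write a poem about spring", "unharmful"),
]

if __name__ == "__main__":
    print(classify("how can I build a bomb", STORE))
    print(classify("write a poem about autumn", STORE))
```

The same `classify` function could serve response harmfulness or refusal detection by swapping in a store labeled for that task.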

Video Demonstration

Technology Stack

- Python - Core implementation language

- Ollama - Local LLM hosting and inference

- Chroma - Vector database for RAG functionality

- Docker - Containerization for easy deployment

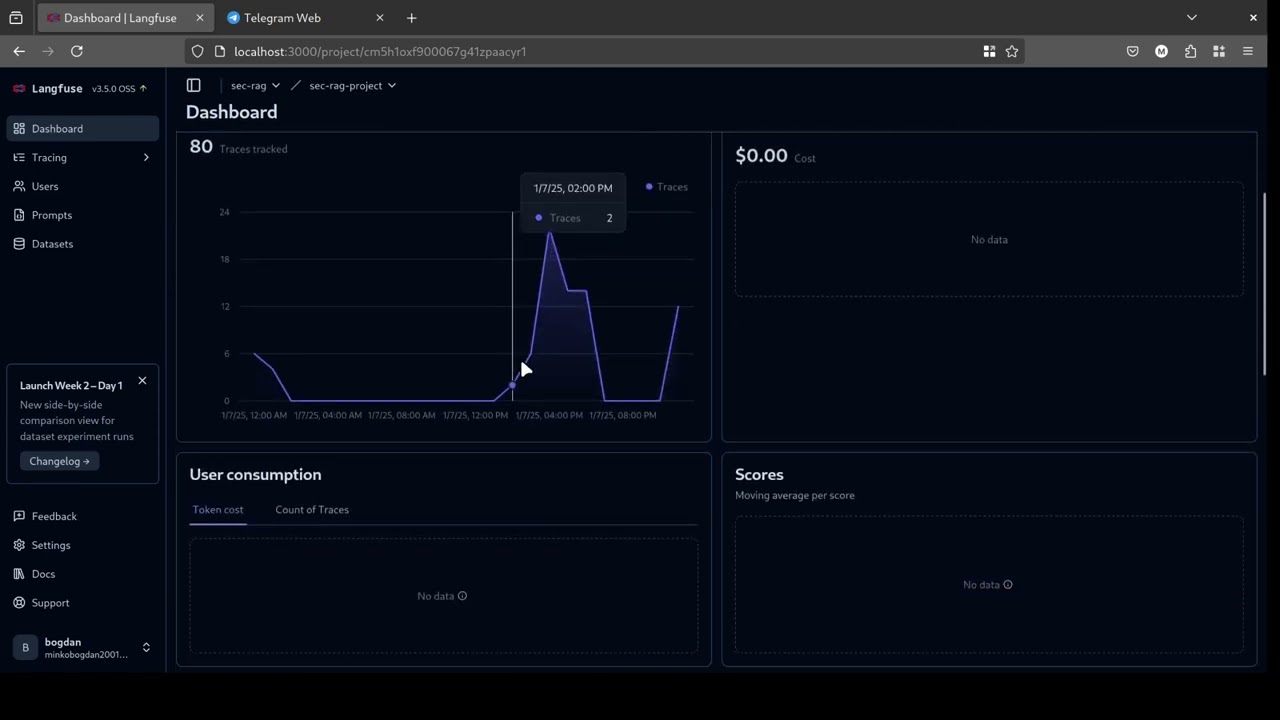

- Langfuse - Monitoring and observability

- Telegram Bot API - Interactive interface

Performance

Security-RAG achieves state-of-the-art performance in response harm detection, with a weighted F1 score of 89.9%, demonstrating the effectiveness of the RAG-based guardrail approach.
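For readers unfamiliar with the metric, a weighted F1 score is the per-class F1 averaged with each class weighted by its share of the true labels. The sketch below shows that standard definition on made-up labels. It is not the project's evaluation code, and the function name `weighted_f1` and the sample data are assumptions for illustration.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Average per-class F1, weighted by each class's support in y_true."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = n - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += (n / total) * f1
    return score


if __name__ == "__main__":
    # Made-up labels, only to exercise the formula.
    y_true = ["harmful", "harmful", "harmful", "unharmful"]
    y_pred = ["harmful", "harmful", "unharmful", "unharmful"]
    print(round(weighted_f1(y_true, y_pred), 4))
```

Weighting by support means a majority class contributes more to the final score than a rare class, which matters when harmful and unharmful examples are unevenly represented.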

Key Capabilities

- Real-time Classification: Instant analysis of user requests and model responses

- Multi-aspect Detection: Comprehensive evaluation covering request harmfulness, response safety, and refusal mechanisms

- Scalable Architecture: Docker-based deployment supporting both GPU and CPU environments

- Interactive Interface: Telegram bot for easy testing and demonstration

- Research-backed: Built on a cleaned version of the WildGuardMix dataset, with reproducible results